Changing the Game in Esports Research: Registered Reports at the International Journal of Esports

by Peter Etchells*

*Email: p.etchells@bathspa.ac.uk

Received: 25 Jan 2021 / Published: 05 Apr 2021

Video games research, from a psychological perspective, has endured a controversial and contentious history. In part, this has been due to the inherently complex nature of the questions being asked, coupled with the perception, both public and academic, that video games are a ‘lesser’ form of entertainment, and that research concerning their effects must therefore necessarily be regarded as ‘soft’ science[1]. The reality, though, is quite the opposite: it is not a trivial task to study video game play, either in the lab or observationally. A classic example of this disconnect can be found in the large body of literature concerning the behavioural and wellbeing effects of playing so-called violent video games. Whereas early research seemed to suggest consistent causal links between playing such games and aggressive behaviours[2], it became increasingly apparent that the way researchers measure aggression is vulnerable[3] to Questionable Research Practices (QRPs) such as selective reporting[4], HARKing (‘Hypothesising After the Results are Known’)[5] and withholding data from public scrutiny[6]. For example, research by Elson and colleagues[7] has shown extreme flexibility in the application and interpretation of one of the most commonly used measures of aggression in video games research, the competitive reaction time task. This work showed that, if analysis pipelines are not carefully specified before they are implemented, it is possible to produce evidence suggesting that violent video games either do or do not cause aggression, using exactly the same data. As research in this particular area has progressed and improved, a different picture has started to emerge.
Studies that adhere to the best-practice principles of open science[8] are able to protect themselves against many of the QRPs present in the existing literature, and tend to show that the link between violent video games and aggression is weak at best, with effect sizes too small to be a cause for concern[9,10]. Nevertheless, public debate around violent video games has remained fairly consistently superficial, with the assumption that such games inherently and solely cause problematic behaviour being the dominant frame in which research is presented[11].

While QRPs such as those mentioned above have been known within the academic community for well over 50 years, it is only since the early 2010s that the full extent of the problems they cause has been revealed. For example, work by John, Loewenstein and Prelec[12] has suggested that approximately 78% of psychologists fail to report all dependent measures within their studies, 67% selectively report studies that show positive results, 72% collect further data after checking for significance, and, perhaps most worryingly, 9% falsify their data. These findings, among others, have since spurred large-scale international efforts to determine the extent to which psychological research is replicable, often with similarly concerning results: the Many Labs 2 project[13], a collaboration of some 200 psychologists, found that of 28 classic and contemporary findings from the research literature, only 14 were replicated.

The reasons for this crisis in psychological research are manifold, but at their core they lie in the incentive structures that have been built into the way that science is conducted and disseminated[14]. Historically, ‘success’ in academic publishing has been marked by an ability to produce novel, eye-catching results: journals are more likely to send papers to peer review (and in turn publish them) if they are viewed as having made critical new discoveries, as opposed to incrementally advancing our knowledge of a particular topic. Coupled with the perception that academics must ‘publish or perish’, this has led to an environment in which researchers are incentivised to manipulate data and hypotheses into a more enticing and publishable story, consign negative findings and replications to the file drawer[15], and focus on irrelevant metrics, such as impact factors[16] and citation rates[17], as markers of success. As a result, science is damaged: the ability to understand the world around us in a meaningful and robust way is lost.

I focus on psychology here, and specifically on the violent video game literature, because it represents a cautionary tale: if we as researchers do not take a principled and robust approach to conducting research in relatively new areas, we risk wasting a significant amount of research time in cul-de-sacs of ideas. In turn, this can easily derail our ability to communicate the balance of benefits, risks and opportunities of engaging with games to a mass audience. Over the past thirty or so years, the psychological study of video games has been dominated by two areas, violent video game effects and gaming addiction, and while such research does have merit, this focus also represents a tremendous loss in terms of missed avenues of inquiry. Fundamental questions, such as why individuals play video games, or how different gaming environments or competition can affect behaviour, remain relatively understudied. With esports taking an increasingly prominent role in video game culture, it is important that we learn lessons from past mistakes, ensuring that this burgeoning research field does not become another dead end of meaningless results.

Introducing Registered Reports

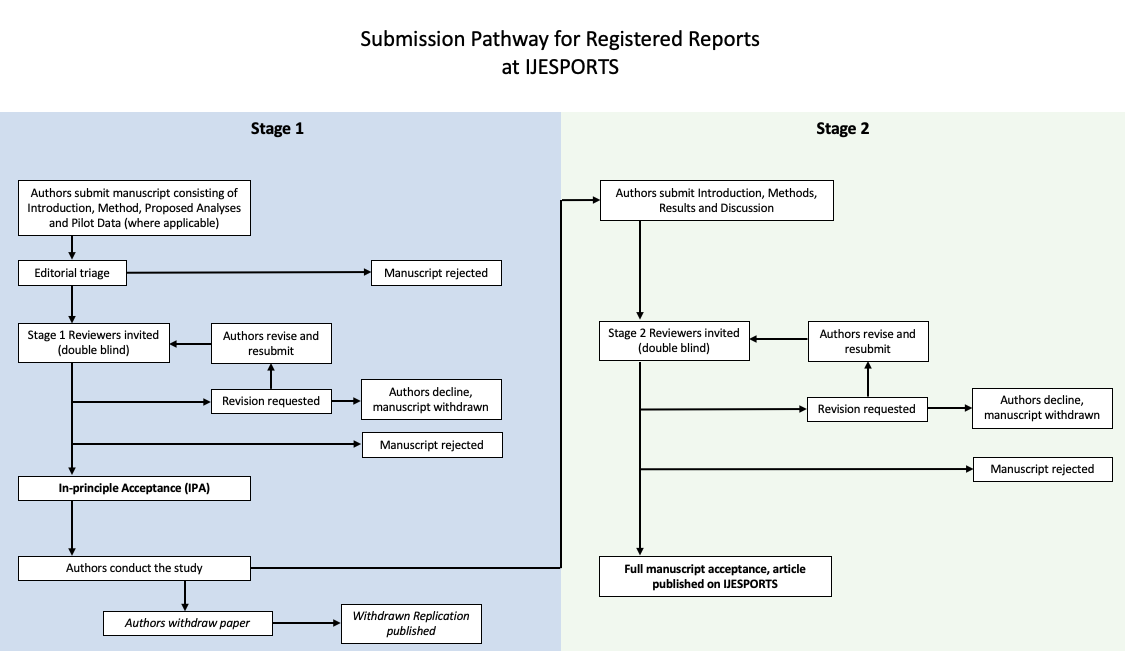

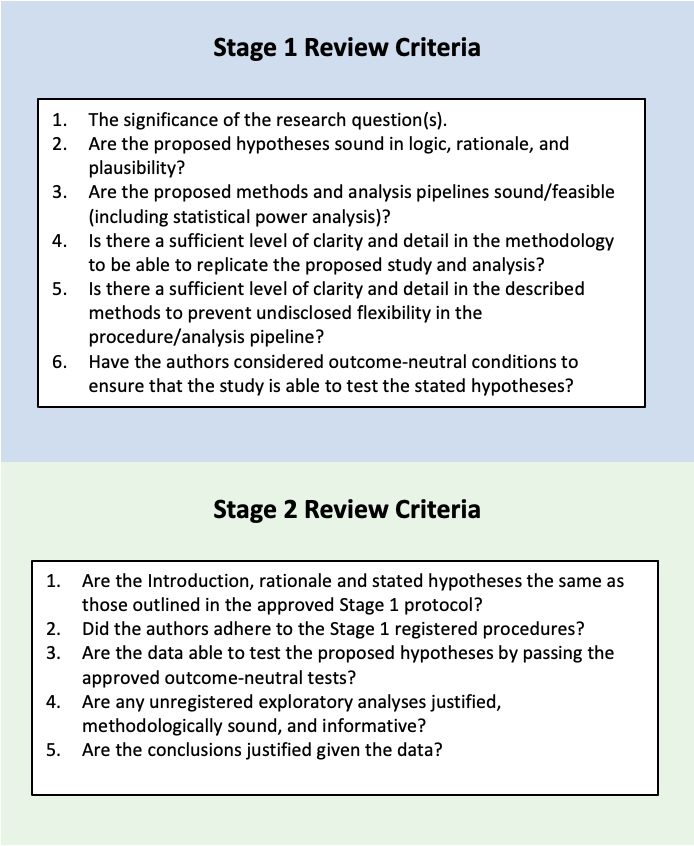

Thankfully, the story isn’t wholly negative. As a result of the prominent and public nature of the replication crisis in psychological science, the discipline has led the charge in instigating revolutionary transformations to improve the way that we conduct, report and access research. One of the most powerful tools developed to protect against some of the more egregious QRPs is a journal article format called the Registered Report[18]. The format, first adopted by the journal Cortex in 2013, requires authors to formally specify their rationale, hypotheses, proposed methods and analysis pipelines before they begin to collect any data. This preregistration document is then submitted to a journal and subjected to peer review (Figure 1). Critically, this means that at this first review stage, editors and reviewers are able to assess the soundness of the rationale, the feasibility of the proposed methods, and the appropriateness of the proposed analyses in the absence of any results, whose direction can often cloud judgement regarding the significance of the research. Additionally, reviewers are able to make meaningful suggestions that can improve the proposed research before it has actually taken place (Figure 2), as opposed to more traditional models of peer review in which criticisms come after the fact.

If the manuscript passes Stage 1 review, the journal can then offer ‘in principle acceptance’ (IPA), which essentially guarantees that, as long as the authors adhere to the preregistered protocol, the final study will be published regardless of the outcome. Once IPA has been granted, the authors can proceed to data collection and, when it is complete, submit a Stage 2 manuscript consisting of the original Introduction and Methods sections from Stage 1, plus new Results and Discussion sections. The Results section must include all of the preregistered analyses, but it can also include additional analyses that the authors developed during the course of data collection; these simply need to be disclosed as unregistered by reporting them separately in an ‘Exploratory Analyses’ subsection. The data associated with the study must be openly available, ideally alongside any supporting experimental code, materials or analysis scripts. If the manuscript meets all of these criteria, it is then published.

The Registered Report format thus provides a degree of inoculation against QRPs such as publication bias, p-hacking, HARKing and closed data[19]. While not all research can or should fit within the Registered Report mould, we at IJESPORTS are excited to be offering a powerful route through which we can start to realign the incentives within academic publishing. This is all the more critical for emerging and rapidly evolving areas of inquiry such as esports, in which theoretical frameworks, methodologies and analytical approaches are in their relative infancy. Registered Reports offer academics interested in the study of video games a chance to change the discipline for the better, and esports research is perfectly placed to lead the charge.

Figure 1: Overview of the submission pathway for Registered Reports (adapted from Chambers et al. [14])

Figure 2: Review criteria for Stage 1 and Stage 2 Registered Reports (adapted from Chambers et al. [14])

References

- Etchells PJ. Five damaging myths about video games – let’s shoot ‘em up. Guardian. 2019. Available from: https://www.theguardian.com/games/2019/apr/06/five-damaging-myths-about-video-games-lets-shoot-em-up

- Ferguson CJ, Kilburn J. Much Ado About Nothing: The Misestimation and Overinterpretation of Violent Video Game Effects in Eastern and Western Nations: Comment on Anderson et al. (2010). Psychological Bulletin. 2010; 136(2):174-178

- Etchells PJ, Chambers CD. Violent video games research: Consensus or confusion? Guardian. 2014. Available from: https://www.theguardian.com/science/headquarters/2014/oct/10/violent-video-games-research-consensus-or-confusion

- Simmons JP, Nelson LD, Simonsohn U. False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science. 2011; 22(11):1359-1366

- Kerr NL. HARKing: hypothesizing after the results are known. Personality and Social Psychology Review. 1998; 2:196-217

- Wicherts JM, Bakker M, Molenaar D. Willingness to share research data is related to the strength of the evidence and the quality of reporting of statistical results. PLoS One. 2011; 6:e26828

- Elson M, Mohseni MR, Breuer J, Scharkow M, Quandt T. Press CRTT to measure aggressive behavior: The unstandardized use of the competitive reaction time task in aggression research. Psychological Assessment. 2014; 26(2):419–432

- Nosek BA, Alter G, Banks GC, et al. Promoting an open research culture. Science. 2015; 348(6242):1422-5

- Przybylski AK, Weinstein N. Violent video game engagement is not associated with adolescents' aggressive behaviour: evidence from a registered report. Royal Society Open Science. 2019; 6(2): 171474

- Ferguson CJ, John Wang CK. Aggressive Video Games Are Not a Risk Factor for Mental Health Problems in Youth: A Longitudinal Study. Cyberpsychology, Behavior and Social Networking. 2021; 24(1):70-73

- Goodson S, Turner KJ. Effects of violent video games: 50 years on, where are we now? Cyberpsychology, Behavior and Social Networking. 2021; 24(1): 3-4

- John LK, Loewenstein G, Prelec D. Measuring the prevalence of questionable research practices with incentives for truth telling. Psychological Science. 2012; 23(5):524-532

- Klein RA, Vianello M, Hasselman F, et al. Many Labs 2: Investigating Variation in Replicability Across Samples and Settings. Advances in Methods and Practices in Psychological Science. 2018; 1(4):443-490

- Chambers CD, Feredoes E, Muthukumaraswamy SD, Etchells PJ. Instead of "playing the game" it is time to change the rules: Registered Reports at AIMS Neuroscience and beyond. AIMS Neuroscience. 2014;1(1): 4-17

- Fanelli D. “Positive” Results Increase Down the Hierarchy of the Sciences. PLoS One. 2010; 5(4):e10068

- Brembs B, Button K, Munafo M. Deep impact: unintended consequences of journal rank. Frontiers in Human Neuroscience. 2013; 7:291

- Bishop D. 'Percent by most prolific' author score: a red flag for possible editorial bias. 2020. Available from: http://deevybee.blogspot.com/2020/07/percent-by-most-prolificauthor-score.html

- Chambers CD. Registered reports: a new publishing initiative at Cortex. Cortex. 2013; 49(3):609-610

- Chambers CD, Tzavella L. Registered Reports: Past, Present and Future. MetaArXiv. 2020. doi:10.31222/osf.io/43298